Klinkende Taal

Overview

As Lead AI/ML Engineer at Klinkende Taal (2024-2025), I led the development of AI systems that help Dutch government organizations communicate more clearly with citizens. The core challenge was fine-tuning large language models to produce text at the B1 reading level, making official correspondence accessible to the 2.5 million Dutch adults with limited literacy. The value proposition: citizens can understand government letters without assistance, which reduces miscommunication and improves democratic participation.

Technical Achievements

Model Evaluation & Selection

I designed and implemented a comprehensive evaluation system to identify the optimal base model for fine-tuning:

- Built a multi-service evaluation platform (Java/Spring Boot + Kotlin) comparing 36 LLMs across 4 providers (Mistral, OpenAI, Groq, Nebius)

- Developed an LLM-as-judge framework with 4 specialized judges evaluating data correctness, style guide compliance, quality adherence, and content rules

- Integrated the Klinkende Taal API for automated B1/B2 language complexity scoring

- Achieved 75.6% B1 compliance with Mistral-Small-24B + DPO, up from a 12.4% baseline, an improvement of 63.2 percentage points
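
The scoring logic behind such an evaluation can be illustrated with a minimal sketch. The judge names, the 0.0-1.0 score range, the pass threshold, and the `Evaluation` record are assumptions made for illustration; they are not the platform's actual schema (which was built in Java/Kotlin).

```python
from dataclasses import dataclass

# Hypothetical judge names mirroring the four evaluation dimensions listed above.
JUDGES = ("data_correctness", "style_guide", "quality", "content_rules")


@dataclass
class Evaluation:
    model: str
    judge_scores: dict[str, float]  # assumed: one 0.0-1.0 score per judge
    is_b1: bool                     # assumed: verdict from the complexity scorer


def passes_all_judges(ev: Evaluation, threshold: float = 0.7) -> bool:
    """True when every judge scores at or above the (assumed) threshold."""
    return all(ev.judge_scores.get(judge, 0.0) >= threshold for judge in JUDGES)


def b1_compliance(evals: list[Evaluation]) -> float:
    """Percentage of evaluated outputs that were scored as B1."""
    if not evals:
        return 0.0
    return 100.0 * sum(ev.is_b1 for ev in evals) / len(evals)


def percentage_point_gain(baseline_pct: float, tuned_pct: float) -> float:
    """Absolute difference between two percentages, in percentage points."""
    return round(tuned_pct - baseline_pct, 1)
```

With the figures reported above, `percentage_point_gain(12.4, 75.6)` gives `63.2`.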

Fine-Tuning Pipeline Development

I developed a complete ML pipeline for training language models on simplified Dutch:

- Implemented three-phase training: Domain Adaptation, Supervised Fine-Tuning (SFT), and Direct Preference Optimization (DPO)

- Built async data pipelines processing 160k+ Wikipedia sentences through B1 filtering and LLM quality assessment

- Developed curriculum learning approaches with 43k preference pairs sorted by difficulty gap

- Deployed training infrastructure on Nebius H100 80GB GPUs using the Axolotl framework

- Created SGLang and vLLM inference pipelines for high-throughput model evaluation
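
As a rough illustration of curriculum ordering for preference pairs, the sketch below sorts pairs so that the clearest contrasts come first. It assumes that "difficulty gap" means the difference in a complexity score between the rejected and the chosen text, and that a larger gap is an easier example; the `PreferencePair` fields and the score scale are hypothetical. The prompt/chosen/rejected record is the common layout for DPO data, but the exact keys a given trainer expects should be checked against its documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreferencePair:
    prompt: str
    chosen: str            # simpler, preferred rewrite
    rejected: str          # more complex, dispreferred rewrite
    chosen_score: float    # hypothetical complexity score (lower = simpler)
    rejected_score: float

    @property
    def difficulty_gap(self) -> float:
        # Assumption: a large gap means the preference is easy to learn.
        return self.rejected_score - self.chosen_score


def curriculum_order(pairs: list[PreferencePair]) -> list[PreferencePair]:
    """Order pairs from easiest (largest gap) to hardest (smallest gap)."""
    return sorted(pairs, key=lambda pair: pair.difficulty_gap, reverse=True)


def to_dpo_record(pair: PreferencePair) -> dict[str, str]:
    """Convert a pair to a prompt/chosen/rejected record."""
    return {"prompt": pair.prompt, "chosen": pair.chosen, "rejected": pair.rejected}
```

Sorting once up front keeps the training loop unchanged: the trainer only needs to read the records in order, with shuffling disabled.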

Synthetic Data Generation

Real government correspondence is privacy-sensitive, so training relied on synthetic alternatives:

- Built the LetterProcessing system for standardizing and pseudonymizing correspondence templates

- Developed the SyntheticLetters pipeline for generating DPO training pairs from templates

- Created 36k combined training examples (32k sentence pairs + 5.3k letter pairs)

- Implemented quality filtering with automatic rejection of ambiguous or low-quality pairs
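
The pseudonymization and filtering steps can be sketched as follows. This is an illustrative sketch only: the regular expressions (nine-digit citizen service numbers, Dutch postcodes, email addresses), the placeholder tokens, and the rejection rules are assumptions, not the rules used in LetterProcessing or SyntheticLetters.

```python
import re

# Hypothetical patterns; a production system would need far broader coverage
# (names, addresses, case numbers) and validation of each match.
PATTERNS = [
    (re.compile(r"\b\d{9}\b"), "[BSN]"),                     # nine-digit number
    (re.compile(r"\b\d{4}\s?[A-Z]{2}\b"), "[POSTCODE]"),     # e.g. 1234 AB
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "[EMAIL]"),
]


def pseudonymize(text: str) -> str:
    """Replace each matched identifier with a fixed placeholder token."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


def keep_pair(chosen: str, rejected: str, min_chars: int = 20) -> bool:
    """Reject pairs that are too short or carry no contrast (assumed rules)."""
    chosen, rejected = chosen.strip(), rejected.strip()
    if len(chosen) < min_chars or len(rejected) < min_chars:
        return False  # too short to judge reliably
    if chosen.casefold() == rejected.casefold():
        return False  # identical texts give no preference signal
    return True
```

Running pseudonymization before pair generation means that every downstream artifact, including rejected pairs, is built only from placeholder text.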

Human Feedback Platform

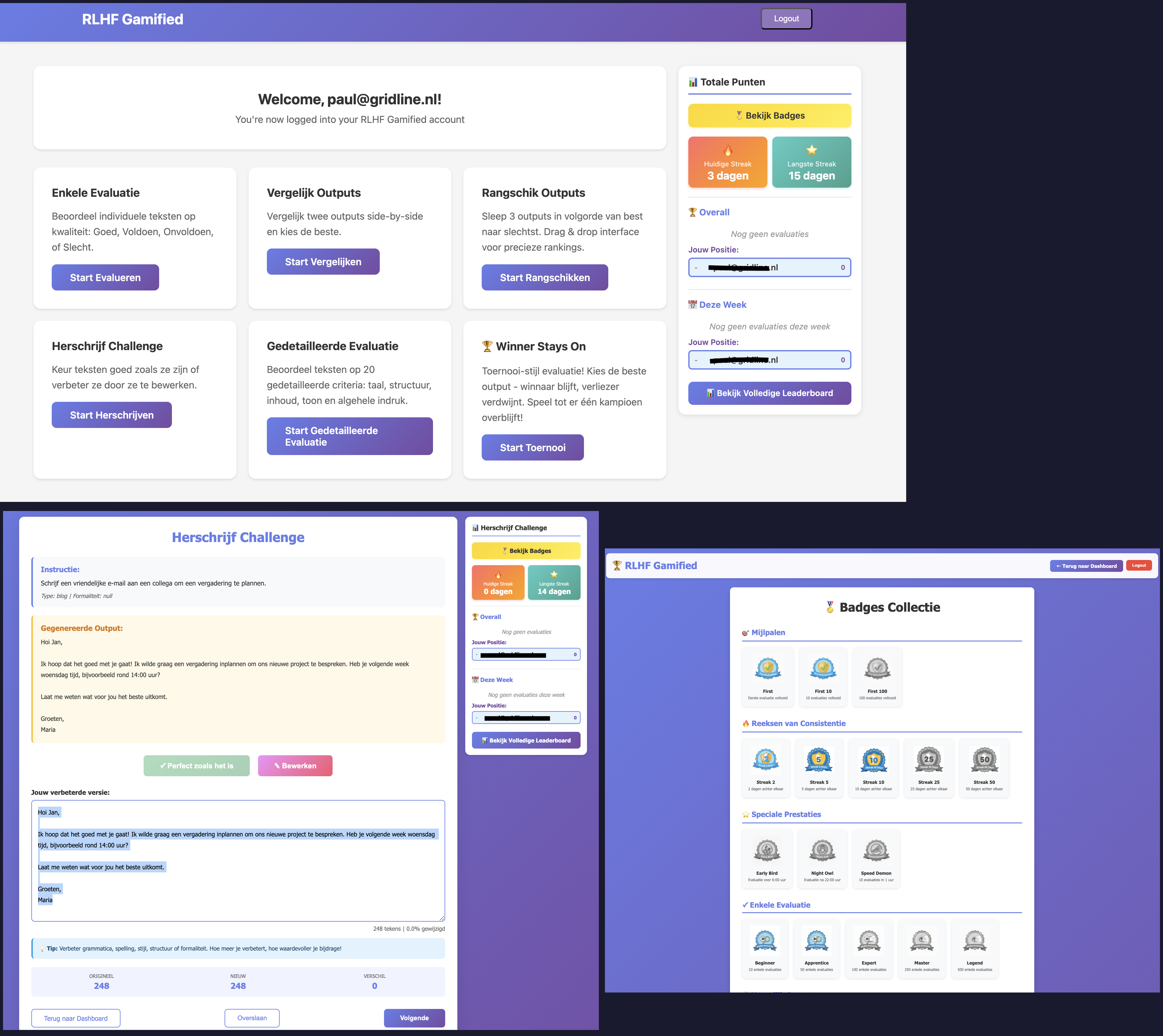

To continuously improve model outputs, I built the LveRLHF expert feedback system:

- Developed a gamified web platform for language experts to evaluate model outputs

- Implemented Kubernetes deployment with secure authentication for domain-restricted access

- Created API infrastructure for batch loading evaluation data and collecting feedback

- Deployed at expertportaal.slimtaal.nl for production use by Lve language specialists

Key Contributions

- Established the complete ML infrastructure for B1 language model development

- Identified Mistral-Small-24B as the optimal base model through a rigorous comparative study

- Built reusable evaluation frameworks with objective metrics for language complexity

- Created comprehensive documentation enabling knowledge transfer to the wider team

- Developed privacy-preserving synthetic data generation for sensitive government correspondence

Technologies & Skills

- Python, Java, Kotlin, Spring Boot

- PyTorch, Axolotl, vLLM, SGLang

- Direct Preference Optimization (DPO) and Supervised Fine-Tuning (SFT)

- H100 GPU training on Nebius cloud

- PostgreSQL, Flyway migrations

- LLM APIs (Mistral, OpenAI, Groq, Nebius)

- Kubernetes, Docker

- Async data processing pipelines

Making government communication accessible through AI-powered language simplification